Why Prompts Are Dead: Building Modular AI with Agent Skills

The Problem with "The Giant Prompt"

As I build more complex workflows for my freelance clients, I keep hitting the same wall: context limits. Stuffing every API rule, database schema, and style guide into one massive system prompt is expensive, because all of it is sent on every request, and it confuses the model, because most of it is irrelevant to the task at hand.

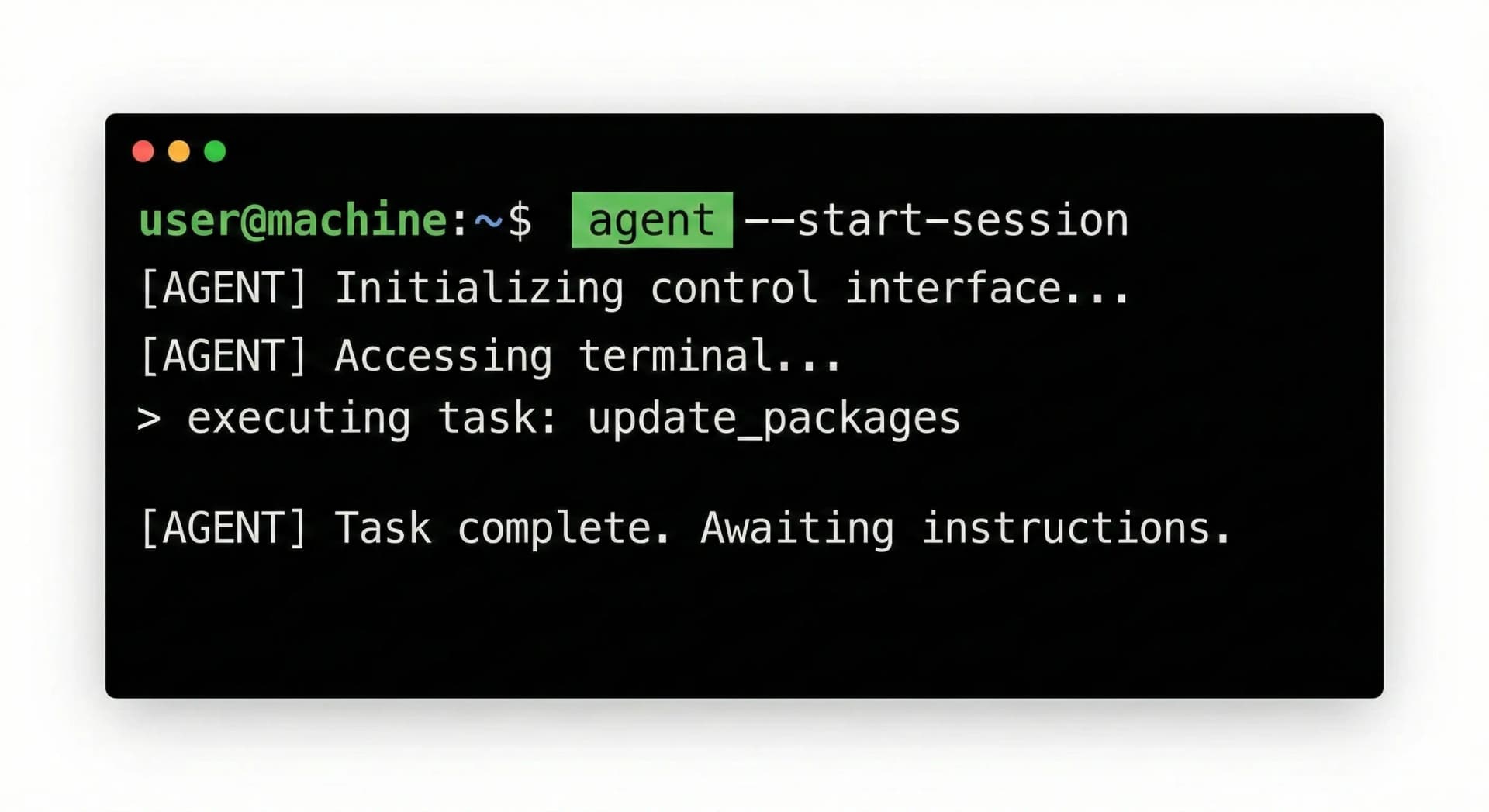

Recently, I shifted my workflow to use Agent Skills, a modular architecture that treats AI capabilities like software libraries rather than text instructions.

The "Progressive Disclosure" Architecture

The most powerful concept here is Progressive Disclosure. Instead of dumping 50 pages of documentation into the AI's context window immediately, an Agent Skill loads in three levels:

- Level 1 (Metadata): Claude sees a YAML header saying "I know how to query the Supabase database." (Cost: ~100 tokens).

- Level 2 (Instructions): Only when I ask for data does Claude read the SKILL.md file to understand how to write the query.

- Level 3 (Resources): If the query is complex, Claude accesses a specific SCHEMA.md or executes a Python script in the skill's folder.
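To make the three levels concrete, here is a minimal Python sketch of the loading order. It is a simulation for illustration, not how Claude implements skills internally. The function names (`split_frontmatter`, `load_metadata`, `load_instructions`, `load_resource`) are my own, and the header parser assumes simple `key: value` lines between two `---` markers rather than full YAML.

```python
from pathlib import Path


def split_frontmatter(text):
    """Split SKILL.md text into (header dict, body string).

    Assumption: the header is plain `key: value` lines between two `---`
    lines. This is a hand-rolled parser, not a full YAML implementation.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, text.strip()  # no header: everything is instructions
    for i in range(1, len(lines)):
        if lines[i].strip() == "---":
            header, body = lines[1:i], lines[i + 1:]
            break
    else:
        return {}, text.strip()  # header never closed: treat as no header
    metadata = {}
    for line in header:
        key, sep, value = line.partition(":")
        if sep:
            metadata[key.strip()] = value.strip()
    return metadata, "\n".join(body).strip()


def load_metadata(skill_dir):
    """Level 1: only the short header, cheap enough to keep loaded always."""
    text = (Path(skill_dir) / "SKILL.md").read_text(encoding="utf-8")
    return split_frontmatter(text)[0]


def load_instructions(skill_dir):
    """Level 2: the body of SKILL.md, read only once the skill is relevant."""
    text = (Path(skill_dir) / "SKILL.md").read_text(encoding="utf-8")
    return split_frontmatter(text)[1]


def load_resource(skill_dir, name):
    """Level 3: an extra file such as SCHEMA.md, read only when needed."""
    return (Path(skill_dir) / name).read_text(encoding="utf-8")
```

The point of splitting it this way is that each call pulls in only what that level needs: `load_metadata` returns a small dict, and the larger files are never read until something asks for them.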

Building My "Project Onboarding" Skill

I created a Custom Skill for my freelance projects. It's a directory structure that looks like this:

    my-project-skill/
    ├── SKILL.md              # "How to deploy to this client's server"
    ├── INFRASTRUCTURE.md     # AWS/Supabase configs (Loaded only on demand)
    └── scripts/
        └── health_check.py

Now, when I open Claude Code, I don't have to explain the project history. I just say "Deploy to staging," and the Agent Skill triggers, reads the specific deployment guide from the filesystem, and executes the script.
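For a sense of what a script like scripts/health_check.py could contain, here is a rough sketch. It is not my client's actual script: the `check_health` and `main` names, the URL argument, and the "HTTP 200 means healthy" rule are all assumptions chosen for illustration. It uses only the Python standard library.

```python
import sys
import urllib.error
import urllib.request


def check_health(url, timeout=5.0, opener=urllib.request.urlopen):
    """Send one GET to `url` and return (ok, detail).

    `opener` is injectable so the check can be exercised without a network.
    Assumption: the service counts as healthy only on HTTP 200.
    """
    try:
        with opener(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as exc:
        # urlopen raises HTTPError for 4xx/5xx responses.
        return False, f"HTTP {exc.code}"
    except OSError as exc:
        # URLError (DNS failure, refused connection) is an OSError subclass.
        return False, f"unreachable: {exc}"
    return status == 200, f"HTTP {status}"


def main(argv):
    """Return a process exit code: 0 healthy, 1 unhealthy, 2 bad usage."""
    if len(argv) != 2:
        print("usage: health_check.py <url>", file=sys.stderr)
        return 2
    ok, detail = check_health(argv[1])
    print(f"{'OK' if ok else 'FAIL'} {argv[1]} ({detail})")
    return 0 if ok else 1
```

To run it as a script, the file would end by calling `sys.exit(main(sys.argv))`. The exit code is what makes this useful inside a skill: the agent gets a clear pass or fail from the script instead of having to interpret free-form output.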

Why This Matters

This is the difference between an AI that chats and an AI that works. By using filesystem access and bash execution, I'm not just getting text back; I'm getting deterministic operations (via scripts) wrapped in flexible intelligence.